Below is the start of the spring 2025 work log.

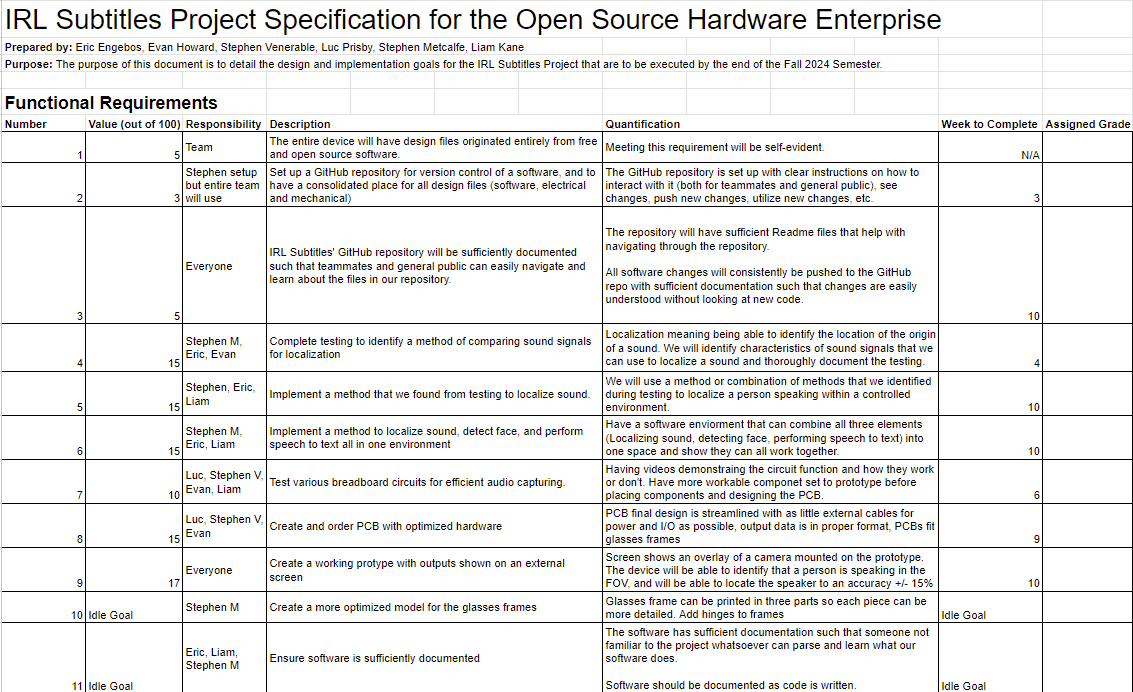

In the first week, Liam and I got our team together the Wednesday before our first meeting. We wanted to get the project spec sheet done and meet our new group members; we have lost three members from last semester and gained two. Before we met, Liam and I got on a call and put together a list of topics to go over in person. Our team did introductions, then we spent time informing our new group members about the project, what was done last semester, and where we want to be at the end of this semester, with the project coming to an end. We also got our roles for the semester with the enterprise this week. Below is a copy of the project spec that we made; we will use it throughout the semester for our work and refer back to it to make sure we are making good progress.

(PROJECT SPEC SHEET)

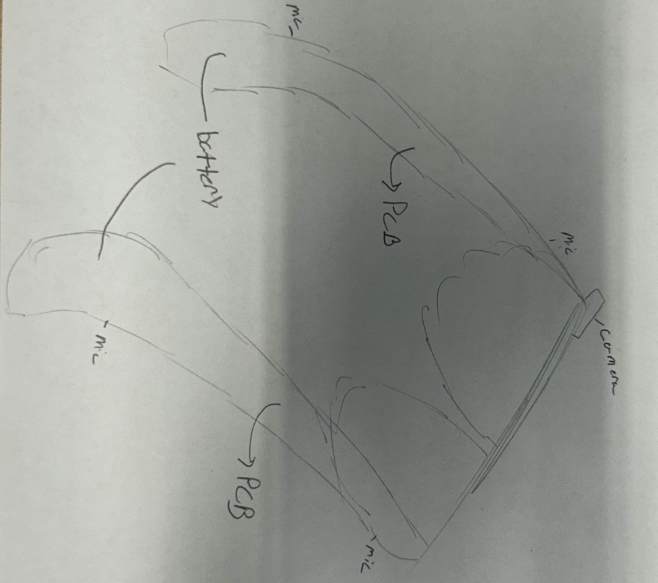

After we got our spec sheet back, we had to make some updates to it, so our team met to update it and turn it back in to ensure a successful semester. While we met to do the updates, our team also talked about who will do each task and who has what skills, to make sure we do the best we can as a team. I am going to be working on the speech to text and the sound localization optimization. I started looking into better ways to implement the speech to text, to get closer to 50% in the captions. I have research ready to present to the team and hopefully will be able to start working on the program next week. I also started to look into the audio input and how we are getting it, to make sure the quality of the input is good enough for the speech to text to work, since that was an issue in the past. I also started a project presentation that will be given in week 3 of the semester during the whole-enterprise meeting time. Below I am adding a sketch of what we hope it will look like at the end of the semester.

Next week the things I want to work on are: start working on audio testing, start working on fixing the speech, and make and give the presentation.

One thing that I am concerned about is getting the speech into the text box and getting it to follow.

This week I did a decent chunk of my team role for the enterprise. I went into the lab, swept the room, cleaned off the counter, and cleaned the sink, along with some other little things around the lab that were left out. I am expected to get 5 hours of my “Lab Cleaner” task done before the end of the semester, so I will do this a few times throughout the year to complete my task.

(picture)

The project work I did for the week was finishing the presentation and giving it with my team during our 11am slot, along with some other small work.

This week, after the presentation, we started testing the microphones that we have. We were looking into our old model but had to take a step back because of how bad the words are. Since we can't get the words to work, we are breaking the input down and starting with just the audio. We are talking through a plan for what to do: we are going to come up with a few things to say every time, so that we can compare the results we get now and later in the semester. We made a little script just to pull data from the mics. It took a little while to get the mics to save to a file rather than be live, but we eventually got the audio playing back as clips that were clear enough. There was a little noise, but not enough to make a difference in the translation, since we think the audio needs to be clear. That script is in the GitHub if anyone wants to see it. Along with that, we also had our weekly meeting, and I did a little cleaning for my lab role. For next week, I would like to start looking into the face detection.

This week we had our weekly meeting and talked about what we have all done. The frames have come a long way, along with the PCB from John and Stephen. We got our CDR time and started making a plan for who was going to do each topic, even though it isn't for a little while. Liam was working on the odias and I had the speech to text. I got the face detection working how it should. Before, the boxes did not stay in the correct location, but now there are no issues even when there are words on the screen, which was a problem last year. As a test, the words “no face” can now pop up even when the box is still by the face; the words have not been put back into the face detection code yet. (picture) Next week I want to get the speech to text working in some manner before CDR.

This week I got the CDR presentation looking better, since it is on Thursday. I also worked on a few different models, Whisper and faster-whisper. I tried an online and an offline model to compare speed and correctness, along with which is the easiest to use. Since we want to stay offline, I was able to keep the offline model, as I eventually got it to work with a small buffer of about 0.1-0.3 seconds to transcribe. There is a little delay, and the words are not right yet. I think CDR went well and we are in a good spot for the coming weeks. In the next two weeks I want to get the PCB ordered and the speech back on the mics.

This week we had our meeting, and it went well as usual. I am struggling to get the words back into the face detection in a neat manner, so that is what I will be working on until it works. Liam and I are working on this together to try to get it done while we wait for the boards to get here and the new frames to be done. Next week we are going to work on this more.

This week we had our meeting after spring break, made sure everyone is doing well, and started to make a plan for the rest of the year. Liam and I are going to keep working on the speech to text and the audio localization, which we are using odias for.

I am new to this project this year for my 2 capstone semesters. The first thing I did was talk with my new group members and find out more about the project than I already knew. I then helped the team pick out goals (“project specifications”) for the semester. We divided the tasks among groups of people and delegated some to certain group members who wanted to do them. Below is what we came up with for the semester. Also below is a sketch of what the project might look like when it is done.

After the project goals were decided on, they got marked up and we tweaked them to better fit what is achievable for the semester. I then did some digging into the components that our team already has and what processes were found last year, to see where to start. I found the camera and the microphones, which we will use for testing and for taking in video and audio inputs. I talked with an audiologist about how the ears and hearing aids find where audio is coming from. Based on some of the findings, I started looking for open source audio software that I can use to analyze sound inputs by looking at the amplitude and time variance of the inputs. Audiometer, Audacity, and Reaper were used to take in inputs from the microphones that we have, for testing and to get audio samples. The microphones were clipped to the sides of the glasses while talking and clapping were done for the audio sample. Now we are in the process of analyzing the sound to find a method to localize the input location. We are taking the amplitude of the signal and the time variance and finding which will be the better option if we can't use both. Below is one of the audio programs that was used for audio input.

I have also downloaded a Linux virtual environment to work on programming that can be used with the GitHub.

For next week, I want to find out more about how to analyze the sound faster and find a way to split the audio inputs into two while still being able to watch the input. I also want to be able to analyze it live rather than watching a prerecorded input. One more thing I would like to do, if there is time, is to formulate a plan for how the sound will be located with either the amplitude or the time difference. The concerns I have are slim, but the main one is finding the correct program to use that will work in the Linux environment.
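As a rough sketch of the time-difference option, the arrival delay between two microphones can be turned into a direction. This assumes two microphones a known distance apart and a source far enough away that the sound arrives as a plane wave. The function name, the 0.15 m spacing, and the 343 m/s speed of sound are assumptions for illustration, not measurements from the glasses.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for room-temperature air (assumed)


def bearing_from_delay(delay_s, mic_spacing_m):
    """Estimate the source angle in degrees from the arrival-time difference.

    0 degrees means straight ahead; +/-90 degrees means directly to one side.
    """
    ratio = SPEED_OF_SOUND * delay_s / mic_spacing_m
    # Clamp so measurement noise cannot push the ratio outside asin's domain.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


# Hypothetical spacing between the two microphones on the frame.
spacing = 0.15
print(bearing_from_delay(0.0, spacing))                             # source straight ahead
print(bearing_from_delay(0.5 * spacing / SPEED_OF_SOUND, spacing))  # source off to one side
```

With only two microphones this cannot tell front from back, which is one reason to combine it with the amplitude or the camera.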

This week I was able to use the virtual environment to analyze audio inputs. A Python program was used to take the inputs in from the pre-recorded audio and find the peaks. At first it wasn't too precise, but with some adjustments more of the peaks were found, more accurately and efficiently. After getting a base program, a few more audio files were recorded to get a variety of tests and try it on more than just quick clapping. One recording has the microphones on the glasses while on a head, with words, a clap, and more words. Another recording was with words while the person wearing the glasses faced me, the speaker, then turned 45 degrees, then looked 90 degrees away from the speaker. With those audio files there had to be more adjusting to get the audio to match up; they were cross-correlated in the program to get rid of the timing error between the microphones. After the testing, the team came up with a method that we think will work for localizing the audio input: a combination of the amplitude and the time difference, together with the camera, is what we will use to find the location of the input. After the testing was done with the new audio, I started looking into how to better get the amplitude. I looked into the total energy of the signal and the area under the curve, along with trying to find which peak is higher at different points of the audio. I am still learning how Python works, but so far it is going well; I am figuring out the syntax and how to take in what I need to get done, and it is something I am enjoying learning. The goal I have for next week is to finalize a method and plan with the team, to decide what we want to use and how we want to combine what we have to make our methods work together, along with making the program more robust. I would also like to talk with the team to see what ideas we have for implementing these in one environment.
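The cross-correlation step can be sketched in a few lines. This is not the program from the GitHub; it is a minimal pure-Python illustration that assumes two equal-length lists of samples, and the function name, the made-up clap data, and the 44100 Hz sample rate are all hypothetical.

```python
def cross_correlation_lag(left, right, max_lag):
    """Return the lag (in samples) at which `right` best lines up with `left`.

    A positive lag means the sound reached the right microphone later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(left)):
            j = i + lag
            if 0 <= j < len(right):
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Hypothetical data: one clap that arrives 3 samples later on the right mic.
clap = [0.0, 0.2, 1.0, 0.4, 0.1]
left = clap + [0.0] * 10
right = [0.0] * 3 + clap + [0.0] * 7

lag = cross_correlation_lag(left, right, max_lag=8)
sample_rate = 44100  # assumed sample rate in Hz
print(lag, lag / sample_rate)  # lag in samples, then in seconds
```

The brute-force loop is slow for long recordings; a library routine would likely be a better fit for real audio, but the idea is the same.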

This week we met and got some chips ordered, along with a few dev boards that we can use for testing. In our weekly meeting we also came to the thought that we are going to need to convert our programs to C rather than Python. I then spent some time this week converting my Python code for the testing and sound localization to C; it was a little difficult with the different libraries being used. When our group gets our programs converted, we are going to try to get them to work with each other: the timing, the amplitude, and the face detection all working together to track a speaker for the person wearing the glasses. I also spent some time this week doing the lab manager role that I am a part of, keeping up the 3D printers. In the next week our team will make a slide show presentation for the CDR, along with working to get all of our programs into the same environment.

This week I spent some time making my C program for amplitude more accurate. Rather than taking single sample points throughout the audio sample, I am taking a few points that are grouped together and averaging them to be more accurate. I ended up getting some good results from that for the difference in dB between the audio samples. We have received the dev board and the ESP32 chip. I spent some time getting familiar with connecting to it and creating projects with it, and I was able to run my program on the ESP32. I then spent some more time creating new projects and finding out how we will be able to implement more on it. During a meeting later in the week, our team was able to get a circuit built on a breadboard and get audio run through it. Our team also spent some time making the CDR slides and giving the presentation this week, and we got some good feedback from the questions that were asked during the presentations. We now have an idea of what we want the wording output to look like. For now, as a proof of concept, we are going to have a monitor display the words rather than the HMI application that we thought of. There are also some hardware changes that we can now make by using a different op amp for less noise. We also decided that we will continue to use the plug-in rather than a battery for now. After the presentation we came up with what we want to get done next week and when we want to meet again. We will be meeting twice and working on getting the programs onto the dev boards and working well before we move on to the next steps of getting them together. CDR slides: https://docs.google.com/presentation/d/1LLZVMqUv66nTDsn91qqaQaRp4XvlmwcXdzSi5R_cPrs/edit#slide=id.p
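The grouped-averaging idea can be sketched as below. The real program is in C; this is a Python sketch for illustration only, and the function names, the group size of 4, and the sample values are assumptions. It averages the absolute amplitude over each group of points, then compares the two channels in dB.

```python
import math


def grouped_levels(samples, group_size):
    """Average absolute amplitude of each consecutive group of samples."""
    levels = []
    for start in range(0, len(samples) - group_size + 1, group_size):
        group = samples[start:start + group_size]
        levels.append(sum(abs(x) for x in group) / group_size)
    return levels


def level_difference_db(left, right, group_size=4):
    """Difference in dB between the averaged levels of two channels.

    Assumes neither channel is completely silent (no divide-by-zero guard).
    """
    left_levels = grouped_levels(left, group_size)
    right_levels = grouped_levels(right, group_size)
    left_avg = sum(left_levels) / len(left_levels)
    right_avg = sum(right_levels) / len(right_levels)
    return 20.0 * math.log10(left_avg / right_avg)


# Hypothetical data: the right channel is the same signal at half the amplitude.
left = [0.5, -0.5, 0.4, -0.4, 0.6, -0.6, 0.5, -0.5]
right = [x / 2 for x in left]
print(round(level_difference_db(left, right), 2))  # prints 6.02
```

A positive result means the left channel is louder, which would suggest the speaker is closer to the left microphone.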

Since the last post I made, I have had two weeks of work and team activity. Our team had two meetings, one each week, and then a few sub-meetings on things that we wanted to collaborate on. We have found that we can still use the Python code, with the computing done on the computer that we had from the CDR. In the first week we had our meeting to give updates about what we have all found, then split up what we each want to do for the rest of the week until the next meeting. I was going to find the best way to connect the microphones and cameras to the ESP32 and have them communicate with each other. I have found that we can use a combination of UART and I2S. Another thing that was decided was that we will have 4 microphones and one camera. We would like to have two cameras for depth perception, but because of size we will have to have just one for now, as the frame does not have a good spot to mount the camera at the moment. In this setup the inputs will go to an ESP32, which sends to two master ESP32s; the RTC of the master ESP32 will align the data. I also spent some time looking at the frame design and trying to find a way to redesign it so that the camera can mount in an effective way that won't block any vision, and so that the glasses look more realistic. Although I did not redesign it, I have a better understanding of the plan for the redesign and print. We will try to have the glasses print in three pieces so that the frame can bend a little and close like typical glasses. I also looked into OpenCV for the display, as I didn't know too much about it, but from what I found I don't think our team should use it, and we should go in a different direction for the display; I found that Ignition HMI could be used for the camera. Our group also met again later in the week to look at possible camera options that we could buy to mount on the frame and that would work with the process that we have set right now.
We have found that we want to get something around a 0.3mm camera that will be small enough to fit while still giving us good enough info for the face detection, although a decision on which camera to buy was not made this week. In the next week our group had another meeting on Tuesday, since fall break is this week and a few of our team members, including myself, are leaving for the break and going home. We all brought up what we had found from the last week and came up with a plan for what we want to work on this week and over break, and what we want to cut or add from the previous week's work. In the meeting we also decided on a camera that we are going to get for the frame, which is linked here: https://www.amazon.com/Espressif-ESP32-S3-EYE-Development-Board/dp/B09MS6PH7L . This week I was finding out how we can get the master ESP32 to connect to the computer. There is the option to plug in, but I think it would be better to try to get it to connect over WiFi, so that there aren't any wires going from your head to your pocket. I then spent some time finding out how that works and how to get the connection made. I found that there is code that can be run and some things have to be set, but once the ESP32 has joined the same network, it can be connected to from the computer by its IP address. The ESP32 will have to act as a server on the WiFi. I also spent some time looking into how we can get the video feed into Ignition with our other programs, by looking at a project I did in the past with Ignition. I found that the video can be streamed to it, and the other programs and tests can be introduced with the Python code, as Ignition is based on Python. We will have to use a container with set points to get the words to line up, but I don't think that will be as bad as just getting the glasses connected to the computer.
The things on the to-do list for next week are to have our meeting, talk about what everyone found, see what we want to use and not use, and go from there to make a better to-do list. I believe we are going to want to order the PCB and, once it gets here, power it up.

In the past week I have been working on getting the ESP32-S3-EYE connected to a computer over WiFi as an access point. The access point was chosen as the method because it would not need an external internet connection in order to function. I first wrote code that just made the WiFi network appear, to make sure that I was able to get it to come up. Once I was able to get that working, I started to set up the ESP32-S3-EYE so that a web browser could connect to it by typing in http:ip. I was then able to see the text from my code that said hello from the esp-eye. I then started working on a program for streaming the video, which gave me a lot of trouble in finding the correct pins. I had the WiFi connected, and in our weekly meeting we talked about solutions that could fix the camera issue. Later in the week, in a meeting, our team found that one of the pins in my code for the camera's pin configuration was swapped. When that was fixed, the camera was able to stream to the web browser over WiFi when /stream was typed in after the http:ip, as http:ip/stream. (picture) For the next week, until we have our check-off, the to-do list for me and our team is to get the rest of our requirements done. I will be working on Python code that can take data from the web page and get it into OpenCV, so that the localization methods can be used in one environment. If we are able to get that done by the check-off date, we will be done with all of the things we said we would get done.

I have now got the Python script pulling from the web server that the eye is sending the MJPEG to over WiFi, so I am able to view the MJPEG video that the camera is sending. With help from Evan in my group, we were able to get the face detection back onto the video when it pulls from the web server. One interesting thing we noticed is that when the web server is open in a browser, the script will not pull from it; it has to be closed. Now that we have them in the same location, the only thing left to do is add the audio to this method, and after the audio is in there the prototype should be working. When there is a face in the field of view, the detection is almost 100 percent.
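One common way to pull frames out of an MJPEG stream is to scan the incoming bytes for the JPEG start and end markers. The sketch below is not the script from the GitHub; it is a minimal illustration with made-up bytes standing in for the network data, and the function name and the fake boundary text are assumptions.

```python
JPEG_START = b"\xff\xd8"  # JPEG start-of-image marker
JPEG_END = b"\xff\xd9"    # JPEG end-of-image marker


def extract_jpeg_frames(buffer):
    """Split complete JPEG frames out of a bytes buffer.

    Returns (frames, leftover). The leftover holds any partial frame and
    should be put in front of the next chunk read from the stream.
    """
    frames = []
    while True:
        start = buffer.find(JPEG_START)
        if start == -1:
            # Keep a trailing 0xFF in case a marker is split across chunks.
            return frames, buffer[-1:] if buffer.endswith(b"\xff") else b""
        end = buffer.find(JPEG_END, start + 2)
        if end == -1:
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]


# Hypothetical chunks; real data would come from reading http:ip/stream.
chunk1 = b"--frame\r\n\r\n\xff\xd8AAA\xff\xd9\r\n--frame\r\n\r\n\xff\xd8BB"
chunk2 = b"B\xff\xd9\r\n"

frames1, leftover = extract_jpeg_frames(chunk1)
frames2, leftover = extract_jpeg_frames(leftover + chunk2)
print(len(frames1), len(frames2))  # prints 1 1
```

In a real script each frame could then be decoded for the face detection, for example with OpenCV's `cv2.imdecode` on a NumPy byte array. Marker scanning can be fooled by JPEGs that embed thumbnails, so this is only a sketch for a simple camera stream.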

Now our team has got the video working over WiFi along with the scraping of audio. The prototype as of now can use the face detection to find the faces that are in the field of view, take the audio input in, and display text in the box below the face, while the cross-correlation is able to find the general location of the speaker who is talking. The speech to text wasn't as precise as we want it to be; that is something we will keep improving in the coming weeks and next semester. The frames and PCB were also redesigned and reprinted and look as follows:

The next thing our team is going to do in the next week and finals week is finish the report that we have for the project, along with making a to-do list and plan for next semester, so that when we get here we can start working right away rather than spending a week or two making a plan and schedule. Our team also has the GitHub all up to date and is working on making the documentation for it very good.